Have you ever had a log source you would like QRadar to parse, but IBM does not support it yet? Then you need to know how to build your own Universal DSM (UDSM).

So I put together what I assume is a unique log pattern, as shown below.

----------- start of sample logs ------------

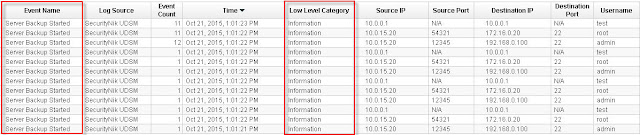

Fri Mar 21 15:10:49 2014: hostname:10.0.0.1 info:Backup Started by user:admin pid:27387 source: 10.0.15.20 sport:12345 destination:192.168.0.100 dport:22 protocol:tcp

Fri Mar 21 15:10:49 2014: hostname:10.0.0.1 info:Backup Started by user:root pid:27387 source: 10.0.15.20 sport:54321 destination:172.16.0.20 dport:22 protocol:udp

Fri Mar 21 15:10:49 2014: hostname:10.0.0.1 info:Backup Started by user:test pid:27387 source: 10.0.15.20 destination:10.11.12.13 protocol:icmp

----------- end of sample logs ------------

Now that we have our logs, let's identify the information which we can extract as it relates to the Log Source Extension (LSX) template. The fields of importance to me are:

DATE_AND_TIME

HOSTNAME

EVENT_NAME

USERNAME

SOURCE_IP

SOURCE_PORT

DESTINATION_IP

DESTINATION_PORT

PROTOCOL

The above matches quite well with what is in the template, which can be downloaded from the IBM support forums or from the references below. As a result, I take out the "pattern id" and the corresponding "matcher" for the ones which I do not plan to use. Examples of these are:

<pattern id="EventCategory" xmlns=""><![CDATA[]]></pattern>

...

<matcher field="EventCategory" order="1" pattern-id="EventCategory" capture-group="1"/>

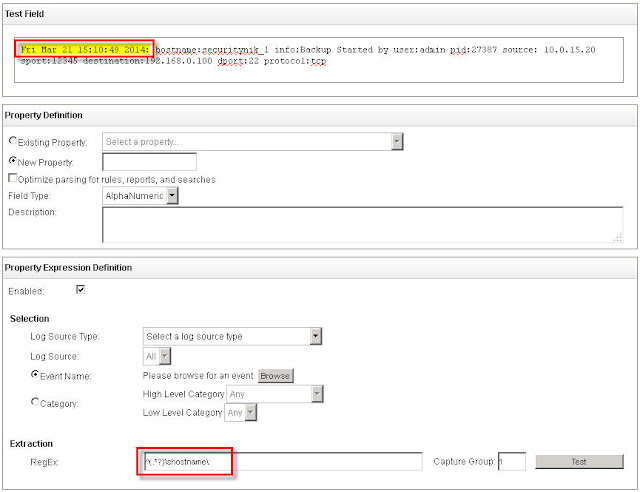

"Custom Event Properties", let's use that to build and test our Regex.

In my case, my regexes look as follows (without the quotes), and all of them use "Capture Group" 1:

DATE_AND_TIME - Regex: "^(.*?)\shostname\:"

HOSTNAME - Regex: "\shostname\:(.*?)\sinfo"

EVENT_NAME - Regex: "\sinfo\:(.*?)\:"

USERNAME - Regex: "\sStarted\sby\suser\:(.*?)\spid"

SOURCE_IP - Regex: "\spid\:\d{1,5}\ssource\:\s(\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})\ssport\:"

SOURCE_PORT - Regex: "\ssport\:(\d{1,5})\sdestination\:"

DESTINATION_IP - Regex: "\sdestination\:(\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})\sdport"

DESTINATION_PORT - Regex: "\sdport\:(\d{1,5})\sprotocol\:"

PROTOCOL - Regex: "\sprotocol\:(tcp|udp|icmp)"
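If you would like to sanity check the patterns outside of QRadar first, here is a minimal sketch in Python that runs each regex against the three sample logs and prints capture group 1. Keep in mind that QRadar uses Java regex while this uses Python's `re` module; the patterns here only rely on constructs the two have in common, but treat the result as a quick check rather than a guarantee. The names `SAMPLE_LOGS`, `PATTERNS` and `extract` are mine, purely for illustration.

```python
import re

# The three sample log lines from this post.
SAMPLE_LOGS = [
    "Fri Mar 21 15:10:49 2014: hostname:10.0.0.1 info:Backup Started by user:admin pid:27387 source: 10.0.15.20 sport:12345 destination:192.168.0.100 dport:22 protocol:tcp",
    "Fri Mar 21 15:10:49 2014: hostname:10.0.0.1 info:Backup Started by user:root pid:27387 source: 10.0.15.20 sport:54321 destination:172.16.0.20 dport:22 protocol:udp",
    "Fri Mar 21 15:10:49 2014: hostname:10.0.0.1 info:Backup Started by user:test pid:27387 source: 10.0.15.20 destination:10.11.12.13 protocol:icmp",
]

# One regex per field, all using capture group 1.
# \sStarted anchors on the space before "Started"; note that \Started
# would parse as \S (any non-whitespace character) followed by "tarted".
PATTERNS = {
    "DATE_AND_TIME": r"^(.*?)\shostname\:",
    "HOSTNAME": r"\shostname\:(.*?)\sinfo",
    "EVENT_NAME": r"\sinfo\:(.*?)\:",
    "USERNAME": r"\sStarted\sby\suser\:(.*?)\spid",
    "SOURCE_IP": r"\spid\:\d{1,5}\ssource\:\s(\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})\ssport\:",
    "SOURCE_PORT": r"\ssport\:(\d{1,5})\sdestination\:",
    "DESTINATION_IP": r"\sdestination\:(\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})\sdport",
    "DESTINATION_PORT": r"\sdport\:(\d{1,5})\sprotocol\:",
    "PROTOCOL": r"\sprotocol\:(tcp|udp|icmp)",
}


def extract(line):
    """Return {field: capture group 1, or None when the regex does not match}."""
    result = {}
    for field, pattern in PATTERNS.items():
        match = re.search(pattern, line)
        result[field] = match.group(1) if match else None
    return result


for line in SAMPLE_LOGS:
    fields = extract(line)
    unmatched = [name for name, value in fields.items() if value is None]
    print(fields["USERNAME"], fields["PROTOCOL"], "unmatched:", unmatched)
```

Two things stand out when running this. The DATE_AND_TIME capture keeps the trailing colon after the year, and the icmp line has no "sport" or "dport", so the SOURCE_IP, SOURCE_PORT, DESTINATION_IP and DESTINATION_PORT patterns (which anchor on those keywords) do not match that line at all. If you need the IPs from such events, the anchors would have to be loosened.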

To access the "Custom Event Properties" from the "Admin" tab, select "Custom Event Properties" then "Add".

Note this is for testing so please don't select "Save" once completed.

See below for the example in which I extract the date and time from the logs.

Now that we have our regex, let's build out our Log Source Extension (LSX).

I will append "-UDSM-TEST" to all the pattern ids. (I don't think this is needed but it is recommended that you append something to the default).

Next I will incorporate my regex in the various fields. To do this, the regex needs to be placed between the CDATA markers. So "<![CDATA[]]>" now becomes "<![CDATA[MY REGEX GOES IN HERE]]>"
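To make the substitution concrete, here is a small Python sketch that generates the "pattern" and "matcher" lines in the same shape as the template lines quoted earlier, with the suffix appended to the pattern id and the regex dropped between the CDATA markers. The helper names `pattern_element` and `matcher_element` are hypothetical, and the field name "UserName" is my assumption of what the template calls that field; check your copy of the template for the exact names.

```python
def pattern_element(pattern_id, regex, suffix="-UDSM-TEST"):
    """Build one <pattern> line with the regex placed inside the CDATA section."""
    # "]]>" would end the CDATA section early and break the XML.
    if "]]>" in regex:
        raise ValueError("regex must not contain ']]>'")
    return f'<pattern id="{pattern_id}{suffix}" xmlns=""><![CDATA[{regex}]]></pattern>'


def matcher_element(field, pattern_id, suffix="-UDSM-TEST", capture_group=1):
    """Build the <matcher> line that points at the pattern id above."""
    return (
        f'<matcher field="{field}" order="1" '
        f'pattern-id="{pattern_id}{suffix}" capture-group="{capture_group}"/>'
    )


# "UserName" is an assumed field name; the regex is the USERNAME one from this post.
print(pattern_element("UserName", r"\sStarted\sby\suser\:(.*?)\spid"))
print(matcher_element("UserName", "UserName"))
```

The point to notice is that the suffix goes on both the pattern's "id" and the matcher's "pattern-id", so the two still refer to each other, while the "field" attribute stays as the template has it.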

Additionally, because I have username (identity data) in the log, I will change 'send-identity="OverrideAndNeverSend"' to 'send-identity="OverrideAndAlwaysSend"'

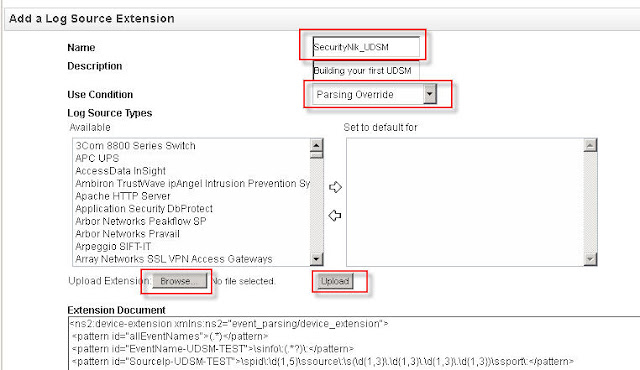

Now that we have built our LSX, let's look at uploading this to QRadar.

From the "Admin" tab, select "Log Source Extensions". From this window, select "Add". Enter your UDSM name, select "Browse" to choose your file, and then "Upload" to... well, you guessed it, upload the file.

If there are no issues, you should see your file loaded in the screen below. If you encounter errors, you will need to fix them in your LSX file. Once all is good, click "Save".
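A couple of the more common mistakes can be caught before you even upload. The sketch below, in Python, checks that the file is well-formed XML and that every "pattern-id" refers to a "pattern" that actually exists (easy to break when renaming ids). The sample document inside it is a cut-down stand-in: the root element, its namespace and the "match-group" wrapper are my assumptions about the template layout, and the function name `check_lsx` is made up for this example. QRadar may still reject a file for other reasons.

```python
import xml.etree.ElementTree as ET

# Cut-down stand-in for an LSX file. The root element, namespace and
# match-group wrapper are assumptions; only pattern/matcher come from this post.
SAMPLE_LSX = r"""<device-extension xmlns="event_parsing/device_extension">
  <pattern id="UserName-UDSM-TEST" xmlns=""><![CDATA[\sStarted\sby\suser\:(.*?)\spid]]></pattern>
  <match-group order="1" description="UDSM test">
    <matcher field="UserName" order="1" pattern-id="UserName-UDSM-TEST" capture-group="1"/>
  </match-group>
</device-extension>"""


def local_name(tag):
    """Strip the '{namespace}' prefix ElementTree puts in front of tag names."""
    return tag.rsplit("}", 1)[-1]


def check_lsx(xml_text):
    """Return a list of problems; an empty list means these basic checks passed."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]

    pattern_ids = set()
    references = []
    for element in root.iter():
        name = local_name(element.tag)
        if name == "pattern":
            pattern_ids.add(element.get("id"))
        elif element.get("pattern-id") is not None:
            references.append((name, element.get("pattern-id")))

    return [
        f"<{name}> references undefined pattern id {pattern_id!r}"
        for name, pattern_id in references
        if pattern_id not in pattern_ids
    ]


print(check_lsx(SAMPLE_LSX))
```

To run it against your own file, read the file contents into a string and pass that to `check_lsx` instead of `SAMPLE_LSX`.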

Below shows what a successful upload looks like. Provide the name and ensure that the "Use Condition" is set to "Parsing Override".

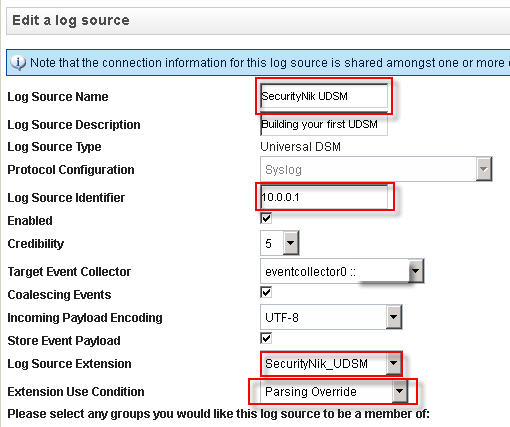

Adding your log source

Now that we have our LSX, let's add the log source which will be forwarding the logs.

From the "Admin" tab select "Log Sources". From the "Log Sources" window, click "Add".

Once you have finished creating your log source, it is time to "Deploy Changes" under the "Admin" tab.
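If you do not have a live device producing these logs, you can replay the sample lines yourself. The Python sketch below sends each line as a UDP datagram. It assumes your log source was created with syslog over UDP on port 514, which this post does not prescribe, and the address in the usage comment is a placeholder, not a real QRadar host. Whether QRadar attributes the events to your log source also depends on the Log Source Identifier you configured. The function name `send_lines` is made up for this example.

```python
import socket

# One of the sample log lines from this post.
SAMPLE_LOGS = [
    "Fri Mar 21 15:10:49 2014: hostname:10.0.0.1 info:Backup Started by user:admin pid:27387 source: 10.0.15.20 sport:12345 destination:192.168.0.100 dport:22 protocol:tcp",
]


def send_lines(lines, host, port=514):
    """Send each line as one UDP datagram and return how many were sent."""
    sent = 0
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        for line in lines:
            sock.sendto(line.encode("utf-8"), (host, port))
            sent += 1
    return sent


# Usage (placeholder address, replace with your own QRadar event collector):
# send_lines(SAMPLE_LOGS, "192.0.2.10")
```

UDP gives no delivery confirmation, so the way to confirm arrival is still to look for the events in the "Log Activity" tab.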

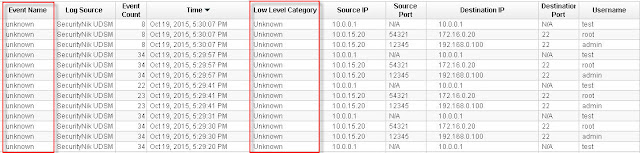

So we have made progress, but obviously we still have issues, as some parts of the log activity still show as unknown. Consider this good news, as at least we know the data is being seen in QRadar.

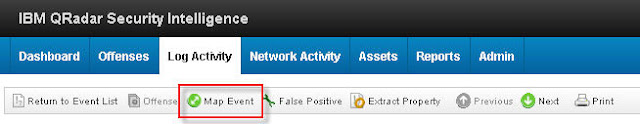

Let's next double click on one of the "unknown" events. From the window select "Map Event". The objective here is to provide QRadar with an understanding of what the previous values represent, thus we need to map these to their equivalent QID.

Now that we have clicked "Map Event", let's go ahead and provide the necessary mappings.

If everything goes well, you should see the following message: "The event mapping has been successfully saved. All future events matching these criteria will be mapped to the specified QID." In this case the QID is "59500166".

Voila, there you go, you have now built your first UDSM.

As always, hope you enjoyed reading this post. Maybe you can leave a comment to let me know if this was helpful.

If you use QRadar and would like me to consider doing more work on specific areas of QRadar leave a comment and I will see what's possible.

For further guidance on this see the references below:

References:

My LSX Example

https://www.ibm.com/developerworks/community/forums/html/topic?id=77777777-0000-0000-0000-000014970193